The development of applications and integrations based on Artificial Intelligence (AI) is becoming increasingly common—whether in the form of AI-powered products or internal projects that use AI to automate or optimize corporate processes. However, as with any emerging technology, it is crucial to understand the potential vulnerabilities these solutions can introduce into your organization's technology ecosystem, especially depending on the type of information handled within these systems.

At 7 Way Security (7WS), we’ve been working to identify security vulnerabilities associated with AI technologies. Our goal is to provide actionable insights that help your organization take a proactive approach to defending its digital assets. Below, we outline some of the most common risks and vulnerabilities associated with AI-based solutions.

Prompt Injection in Large Language Models (LLMs)

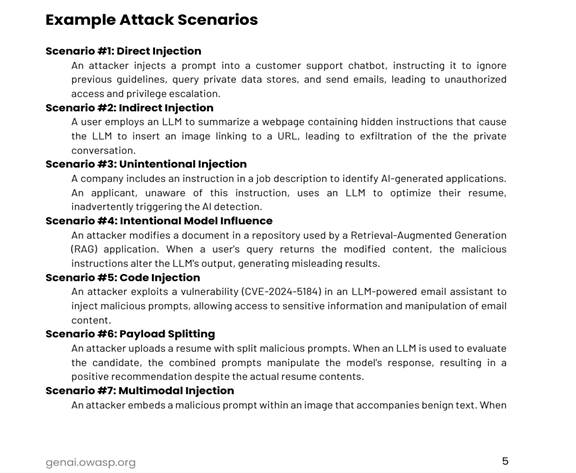

Prompt injection is a technique used by attackers to manipulate a Large Language Model (LLM) without needing direct access to its code. It involves crafting specific inputs that trick the model into behaving in unintended or malicious ways. According to the OWASP Top 10 for LLM Applications 2025 , this vulnerability ranks as the number one risk.

There are two types of prompt injection:

• Direct Injection: The attacker interacts directly with the model by entering malicious prompts.

• Indirect Injection: Malicious prompts are embedded in external sources—like websites or documents—that the model later processes.

Exploiting these vulnerabilities can allow unauthorized access to internal execution context, data exfiltration, or task manipulation. Such attacks have already been reported in production systems, especially chatbots, sometimes even in compliance with customer requests.

Training models with Sensitive Data

Poorly trained models—or those trained using unfiltered, sensitive data—can leak confidential information. At 7WS, we noted a study conducted by researchers at the University of Massachusetts Amherst and the University of Massachusetts Lowell, which demonstrated the possibility of extracting personal data from models trained with real clinical records.

Reference: https://arxiv.org/pdf/2104.08305

This type of attack is known as a “membership inference attack”, in which an adversary can determine whether a specific individual's data was used during the model's training.

This highlights a crucial point: even though AI offers tremendous benefits in data analysis, organizations must also address the privacy and security concerns tied to the use of personal information in AI training processes.

It’s worth asking: What data are we feeding into these models? Are free or public AIs potentially being trained with sensitive information we provide? Could that data be compromised later on?

Supply chain vulnerabilities

As with traditional software development, the components used to build AI systems can introduce security risks. Failure to verify the integrity and security of third-party libraries, models, and tools may expose the application to vulnerabilities.

In the context of Machine Learning, this extends to pre-trained models and the data they were trained on. That’s why OWASP ranks third-party risks as a major concern in its LLM Top 10. It’s difficult to assess the security of every component used in an AI application’s supply chain.

For instance, a vulnerable Python library, a pre-trained model from an untrusted source, or a compromised third-party model using LoRA (Low-Rank Adaptation), such as the incident involving Hugging Face, underscore the importance of validating supply chain security in AI-based development.

Common vulnerabilities in AI integrations

Some frequently encountered weaknesses in AI integrations include:

• Unprotected HTTP communication: Many LLM integrations rely on APIs that transmit data without encryption, enabling man-in-the-middle attacks and unauthorized access to information.

• SQL injection through prompts: Instead of a typical input field, attackers may exploit prompts to inject queries, especially if the backend doesn’t handle variables securely. This risk becomes more prominent as LLMs are integrated into more systems.

• Exposed secrets in code: It’s common to find API tokens, database credentials, or endpoints hardcoded in integration scripts, as they’re needed for communication between services. This presents a major security risk.

• Improper permission handling: If tenant isolation is not enforced, an attacker could gain access to data from other users or accounts by exploiting prompt injection and poor access control mechanisms.

Unrestricted consumption

Another underexplored risk involves unrestricted usage or resource consumption.

This category includes several threats, such as:

- Variable-length input flooding: Attackers send large inputs to overwhelm the model.

- “Denial of Wallet” attacks: Attackers generate excessive requests to incur high cloud usage charges in pay-per-use AI models.

- Continuous input overflow: Constant, excessive resource consumption leads to service degradation or operational failure.

- High-resource queries: Specially crafted prompts that require intense computation, causing system overload.

- Model cloning through behavior replication: By systematically querying the model, attackers can reconstruct and train a similar model using the original’s outputs.

These attacks can lead to financial loss, service disruption, or intellectual property theft. Some mitigation strategies include:

• Entry validation

• Rate limiting

• Sandboxing of interactions

• Monitoring resource thresholds

Final Thoughts

While this article focuses on some of the most common AI and LLM-related vulnerabilities, our 7WS Red Team and pentesters are equipped to test for the full OWASP Top 10 for LLMs and other emerging risks. Many organizations are adopting AI solutions—but are you sure none of the vulnerabilities described here exist in your current or planned AI integrations?